This is arguably a pretty lukewarm hot-take, but I slept for 12 hours last night after bombarding my brain with brain stuff (and beer, and Philly foods) for 4 days straight, and finally felt like I could string together coherent words again. No promises, though.

Immediate post-conference thought: there were a lot of man-buns, and one Philly cheesesteak was enough for me.

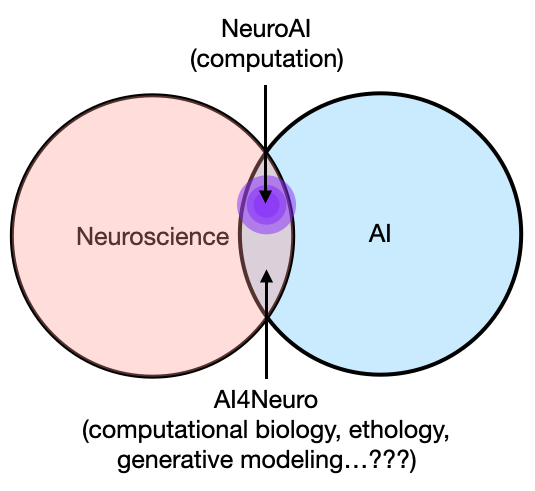

In all seriousness, this was my first CCN and I had a lot of fun in Philly. The smaller scale of the conference and the organizers’ effort to emphasize socializing made the experience a lot more personal, especially compared to SfN. It was eye-opening learning about all sorts of things that I’m not immediately exposed to, though there were way too many deep neural networks for my personal taste. That being said, I really hope this conference continues for years to come and crafts an identity complementary to NIPS and COSYNE. Here are some thoughts in 4 sections:

- personal favorite talks:

  - Alison Gopnik, Ryan Adams, Dani Bassett, Ugurcan Mugan

- thoughts on the mind-matching session:

  - awesome idea, would love to see this evolve. Even random matching would be fun, with some suggested ice-breakers.

- on the panel debate and what question we should be asking ourselves:

  - spoiler alert, it’s not “when would we be done with neuroscience?”

- thoughts on the research presented (posters and talks) as a whole and suggestions for the future:

  - too much deep learning ~ brain and not enough LFPs, oscillations, dynamical systems, and embodied cognition/computation.

Some favorite talks

I enjoyed Alison Gopnik’s opening keynote, because it injected the conference with a good dose of experimental & developmental psychology but also drew from learning algorithms, nicely rounding out the Venn diagram in CCN. She posed the question of why it would be beneficial for animals with higher adult intelligence to have such a long developmental period with relatively poor cognitive abilities. The punchline is that, instead of thinking about the long developmental period and playing as defective or yet-to-be-matured intelligence, we should think about it as the exploration phase during reinforcement learning (explore vs. exploit strategies), or the high-temperature phase during simulated annealing. In plain English, it means children mess around and make suboptimal choices not because they’re stupid mini-adults, but because our species’ brains have evolved to value trying out different options even when it comes with a short-term cost. She went through some experiments that I won’t repeat here, but the takeaway is that children were quicker at learning rules in lab games (e.g., the Blickets game) that contradict the learned experiences of, say, an undergrad or an adult.
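For anyone who wants the “temperature” metaphor spelled out, here is a minimal sketch of my own (not something from the talk): a Boltzmann (softmax) choice rule, where a single temperature parameter controls how often the currently best-looking option gets picked. The option values, the two temperatures, and the names `child` and `adult` are all made up purely for illustration.

```python
import math

def boltzmann_probs(values, temperature):
    """Turn estimated option values into choice probabilities (softmax).

    High temperature -> near-uniform choices (exploration).
    Low temperature  -> mostly the best-looking option (exploitation).
    """
    scaled = [v / temperature for v in values]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical estimated payoffs for three options (illustrative numbers only).
values = [1.0, 0.8, 0.2]

child = boltzmann_probs(values, temperature=5.0)  # "hot": explores a lot
adult = boltzmann_probs(values, temperature=0.1)  # "cold": exploits what it knows

print("child:", [round(p, 3) for p in child])
print("adult:", [round(p, 3) for p in adult])
```

With the same value estimates, the “hot” chooser still picks the worst-looking option a good fraction of the time, while the “cold” one almost never does. That is the short-term cost of exploration in a nutshell: you only find out that an option was undervalued if you keep sampling it.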

The talk appealed to my intuition on how children behave and gave a plausible reason from the perspective of evolutionary biology, and it has potential implications for early education and parenting. My biggest question here is: what is it that the children are actually learning during this period of high-temperature annealing and hypothesis-space exploration? All the experiments demonstrated that they were able to flexibly learn constrained game rules during childhood. Even supposing that generalizes to real-life problems, it’s not clear how humans would benefit from this period of learning unless they were able to take some of the principles into adult life, and I’m not sure what those principles are. One plausible idea is that children, during play/exploration, learn that making mistakes is sometimes good, and the memories of these rewarding mistakes could carry into adulthood and motivate them to do “noisy gradient descent” as a policy during adult life, in order to avoid locally optimal solutions. If you don’t learn this as a child, you might eventually learn this as an adult and end up packing up your life during a mid-life crisis. Too real.

Side note: it was awesome that she made a point of asking the women in the audience for questions first, setting the tone for the rest of the conference, and indeed there were some great questions.

I liked Ryan Adams’s keynote as well, as it was a very digestible overview of deep learning and probabilistic graphical models for those of us who are not in the thick of things in ML. Tim Lillicrap’s talk was the complement of this, meaning a lot of mathematical details for a specific learning algorithm, namely, combining reinforcement learning with an indexable memory element. Both talks appealed to neuroscience at a very cursory level (e.g., sparse memory in the hippocampus), and focused on the advances made in ML, which I didn’t mind because they were educational for an outsider trying to understand the state of the art in this related but tangential field. One theoretical takeaway that’s consistent with the rest of the conference was that people are really looking for modularity and structure in learning algorithms, as the dense and homogeneous architecture of most neural networks simply does not give us the interpretability we want as humans, especially those of us who want to understand some aspect of the brain as well. At the end of the day, scientists like low-dimensional abstractions as a measure of understanding, and I tend to agree here.

One of my favorite talks was a contributed talk by Ugurcan Mugan, who argued that animals who could see farther (i.e., those that have bigger eyes and live on land) have more to gain from planning in a long-term fashion, and therefore developed a better ability to do so for survival (continuation of this work). If you’re a fish that lives in the sea - an environment with very low visibility - there’s no point in being able to predict what will happen 20 meters out because you will never acquire the information required to update those plans. Note that this argument only applies to vision, and it’s possible that it will break down when you account for other senses, like olfaction in sharks. Nevertheless, it’s a great example of considering environmental factors AND our physical hardware when thinking about the task the brain has to solve in the context of the only thing that matters during evolution - survival. Another exciting element of this work is the potential implications for memory: space and time are inseparable, and if you can see further, then you need to plan further in time. And if you need to plan further in time, it stands to reason that you need to remember further back in time. It’s a conjecture, and I’m not well-versed enough in the embodied cognition literature to know whether this is old news or a dumb idea, but it would be cool to artificially extend the physical range of an animal’s sensory organs (arm reach or visual range) and see whether it will impact its ability to consider information at longer timescales. Maybe we can prevent climate change by circumventing the literal shortsightedness of human beings and giving everyone VR goggles that display what’s going on in a 10km radius.

Dani Bassett’s concluding keynote was simply masterful. I know very little about network science, but she went through a comprehensive body of work in the lab, starting with human learning of graph structure from sequential sampling, to graphs in the brain and how cognitive flexibility relates to dynamic flexibility in brain networks. Aside from the science, I loved the structure of the talk, as well as the transitions with quotes from poets and historians. If nothing else, her talk serves as a great template for how to give an entertaining science talk, which is hard to do as the ending keynote of an intense 12-hours-a-day conference! My lingering question from this talk is the role of subcortical neuromodulatory structures in graph control in the brain. She talked about how certain nodes in the (brain) graph had better controllability by electrical stimulation, and it would be interesting to see what native mechanisms the brain has for this type of large-scale control, especially when switching tasks. I think we call this thing “attention”, and it’d be incredibly cool if we could infer subcortical projection of norepinephrine or dopamine neurons based on a node’s controllability.

On mind-matching and socializing

I really appreciated the mind-matching session. I think it’s a fantastic new idea for conferences, especially if it’s ever implemented at larger ones like SfN. Aside from some people being matched with others in their own labs, which seemed to be the main complaint, it was just a great opportunity to meet people. To be honest, I don’t think it really matters whether I’m matched with someone inside or outside my subfield. Getting matched with someone outside my field meant learning something new and establishing potential collaborations (which I think was the intention), and getting matched with people in my own field meant geeking out over similar advances and problems.

Let’s face it, scientists are not the greatest at socializing (myself included), and one of the most valuable things at a conference is meeting people (shout out to Grace Lindsay for organizing the Twitter meet up! Even though I didn’t actually end up meeting you…) By the looks of how many people signed up and vigorously participated in the mind-matching, people really wanted to talk to each other, but perhaps it’s just difficult to get over the initial awkwardness of making the first introduction without any real reason to. Poster sessions are great for socializing, but obviously they’re restricted to people doing similar things, though sometimes I will literally go to a random poster that’s empty just to talk to people. Having this session relatively early in the conference was also a great idea, because it allowed for repeated interactions afterwards just by virtue of bumping into each other over the course of the next few days. Shout out to Jason Kim, who I matched with and who later waved me over to introduce me to a few other Bassett lab members at the poster session.

I think one potential improvement, aside from not getting matched with people in the same lab, is to provide non-science related starter topics. Or maybe science questions at a very high-level, but not too vague so there can be some concrete discussion. The point is just to make a real connection with someone else, over whatever thing you have in common, and sometimes talking about our specific academic interests may feel a little restrictive and forced, especially after doing the elevator pitch 6 times in a row. Some easy ones:

- if you weren’t a cognitive/computational neuroscientist, what would be your dream job?

- who is your celebrity doppelganger?

- what are your top 3-5 non-science related books?

- what are the things you spend the most time doing, other than science?

On the panel discussion and debate

I didn’t love the “Challenges and Controversies” discussion panel, and it’s because I wasn’t sure what the purpose of such a discussion is, particularly with regard to the question of “What would it mean to understand the brain?” I don’t NOT like the concept of such a discussion. I do. I was at CNS earlier this year where Jack Gallant really got into it with Gary Marcus (at the expense of Eve Marder and Alona Fyshe, which is a shame), and if nothing else, it was thought-provoking and entertaining. I liked all 3 individual short talks prior to the discussion: each of their preferred research programs is interesting and answers complementary and important questions. I think the problem in this case was that the debate was too agreeable to inspire new ideas (or to be entertaining), but at the same time, nobody really conceded ground with regard to their commitment to their individual methodological and philosophical ideologies. I’m not saying the discussion has to be rude, which, to the panelists’ credit, it wasn’t. But perhaps it would’ve been better to play the devil’s advocate a little? At a conference like this, I think everyone understands the value of interdisciplinary and complementary approaches, so it’s no surprise that nobody claimed “my way is the best way”. But perhaps it would’ve been better to force that particular line of discussion, maybe by constraining the question, e.g., “if we had only a billion dollars left to invest in brain and cognition research over the next 25 years, who should we give this money to and why?”

Which brings me to the second point: “when will we be done with neuroscience” is not a good question to ask neuroscientists. Why? Because I can tell you the answer in one word: never. Tal Yarkoni wrote a great blog post very recently on this topic of “understanding”. The job of scientists, by definition, is to perpetually ask questions about the thing they are studying, both because that’s what they love doing, and because that’s what they’re paid to do. Modern academia makes it seem like the role of scientists is to answer questions, and that’s partly true, especially in clinical and engineering contexts. But I would argue that the most important thing a neuroscientist does is to ask (good) questions. If that’s the case, the search will never end, as long as a single neuroscientist is alive and still wants to be paid to do what they do. We’ve been doing physics for 400 years, and we still don’t “understand” the world/universe/atoms/anything at 100%. I would say “we” understand the world, because F=ma and I don’t fear for my life whenever I drive across a bridge, but a physicist would find that notion of understanding hilarious and incredibly inadequate. In that vein, someone had a great comment at the end of the debate (anonymous shoutout because I forgot his name), which was something along the lines of “I would say I knew how a car worked, until my car stopped working and I had to go to my mechanic.” Incidentally, I’ve been in several Uber rides where, upon telling the driver what I do for a living, they responded with “don’t we already know how the brain works?” Maybe I happen to stumble into cars with especially educated Uber drivers, but I really doubt that.

So the real question here, I think, is “who or what should we prioritize the understanding of the brain for”, which can also be reformulated into the above constrained optimization question, i.e., if resources were extremely limited, what’s the minimum viable product we, the neuroscientific community, should aim to provide? Let’s just cut the shit for a second and honestly ask ourselves that question, and I think for a lot of us, the answer would be “ourselves”. Because we are curious and it’s a great job and I would love to ask and answer questions about the brain and tinker with cool toys and computer simulations for the rest of my life, and there’s no shame in that. But if that’s the case, the answer to the original question is clearly “never, or for as long as I’m alive.” But let’s step outside of the neuroscience bubble for a second: many of us have written grants claiming that our science may improve understanding and treatment for those with neurological and psychiatric disorders, which is not false, because in the limit, all these small pieces of knowledge do indeed contribute to the answer. But if we’re truly aiming to help patients as quickly as possible, the immediate best thing to do is probably say “fuck understanding the brain”, and come up with therapies and interventions that would improve behavior and quality of life - for example, DBS. Another practical example: should understanding of the brain equate to successful manipulation of behavior? If the latter is the goalpost, then we should also stop doing neuroscience and start working for Facebook because folks out here are already manipulating important behavior, and neuroscience really has nothing to offer to counter that threat.

Okay, mini-rant over, but I think these are problems we should think seriously about as a community, because that money river is not going to flow forever, nor should it, and theoretical neuroscience might be the first one to dry up.

On the conference content as a whole

In terms of the scientific content as a whole, personally, I felt that there were too many “deep learning ~ brain” comparisons. I’ll preface this with my acknowledgement that I might be on the Hatorade because I don’t work in this field, and if I did, I’d probably find it to be just right, so I’m sorry if I am offending any individual work - please excuse my ignorance! It’s not to say that I find the deep learning and brain comparison uninteresting - quite the contrary, I think there are a lot of interesting ideas to work with here, both in terms of improving architectures with known biological constraints, as well as understanding the brain with the help of a (more or less) functional brain model. For example, the contributed talk by Bashivan, Kar & DiCarlo was very insightful in terms of how we can use neural network models as a testbed to make novel predictions about how the brain processes visual stimuli, which they then confirmed with in-vivo experiments and even worked out independent cell control with visual stimuli! Imagine that, SfN can finally stop sending me emails about optogenetics workshops (joking but also please stop).

My complaint here is two-fold. First, I felt that this line of work, on average, lacked explicit acknowledgement of the goal. Is it to build a better artificial system by including biological realism, or is it to understand the brain better with a model, or both? I’m not saying the whole field has to subscribe to one or the other, but I think each individual work should know where it sits. And because many weren’t explicit about it, and this is perhaps due to a lack of intimate understanding of the background on my part, a large part of the conference felt like “let’s take this wet squishy thing we don’t understand and correlate it to this dry hard thing we also don’t understand.” As in the work I cited above, if we’re interested in the theoretical link, then I think the true value here is to learn something we didn’t know about the brain by using predictions from deep learning models. It’s really fascinating that certain aspects of deep neural nets mimic the real brain, and perhaps we’re just on the initial wave of mining the similarities, but if we just talk about algorithmic similarities, I’m sure you can find aspects of SVM or even linear regression where weights mimic neural activity in some tasks, but it would be a mistake to say that we’re learning something about the brain.

My second complaint, which may also have contributed to my philosophical disagreement, is simply the fact that (in my personal opinion) there were just too many posters & talks on this topic. Again, not saying any of them were uninteresting or undeserving, but that I would’ve liked to see more of other things in computational or cognitive neuroscience. One of those things being, obviously, population dynamics and computation observed in LFP and ECoG, and the role of oscillations or high-gamma/multi-unit activity in computation. As far as I know, there were 3 posters on oscillations - one on spontaneously emerging alpha oscillation from a predictive coding network, one on behavioral oscillation in the theta range in a memory task, and the other being my own. There were a handful of other ECoG/EEG/MEG works, but those, while all fascinating in other ways, simply used those signals as an observable. Other than that, and this sounds like a stupid thing to say about a cognitive and computational neuroscience conference, I felt like there was too little representation of works not focusing on the brain. With the talk on visual range I mentioned above being the exception, I think there is a lot of interesting work on computational ethology and embodied cognition that wasn’t here, especially in the area of simulated or physical robotics given the current capabilities of state-of-the-art deep learning architectures. Maybe this is the West Coast cognitive science Kool-Aid talking, but I’d hate to see CCN fall right back into the “brain is a computer” hole that the previous iteration of cognitive science fell into.

That’s all for the conference reflection, hope everyone there had a great time. Comments are still down but would love to know what you thought of it on Twitter. And lastly, here’s some vibes to shake off the edge from all that excitement: