Uhh so this whole thing got a bit long, and I thought about breaking it up into parts, but I just can’t be bothered to make two different posts and tweet it multiple times, so just use the table of contents above to jump to what’s interesting/relevant for you. The 3 sections are more or less standalone. Either that, or get a refill of whatever you’re drinking and buckle up.

At the risk of trying to replicate my own success (but also to revive this blog), I will attempt to summarize some of my own takeaways after attending Cosyne 2022. You should by no means read this as an “objective” survey of the topics and trends at the conference, but rather views through a very filtered lens, partly because I just didn’t go to as many of the talks (lol), so the coverage will not be anywhere close to being complete (is that even theoretically possible?). This is not just a product of me skipping talks, though. I’ll try to convince you later that this was (at least in part) by design. But also, in the 3 years since Cosyne 2019, a lot of things have changed. While the scientific community is still struggling to claw back towards some kind of in-person normalcy, the upside is that most of the conference material exists online in one form or another, even the poster. More importantly, they exist in a systematically-curated and accessible form, in no small part thanks to efforts from the organizers, but also to community initiatives (like World Wide Neuro) and crazy individual efforts, so I don’t feel so compelled to do that.

The biggest difference between 2019 and 2022, though, is that I’m 3 years older, having existed as a fly on the Cosyne auditorium wall for 3 years longer, and somehow finding myself to be partially integrated into this community. Actually, the most interesting thing I took away this year was in fact this particular piece of “meta-perspective”, and it manifests in several concrete ways:

First, my view of the science that was presented at the conference is now less of a point observation, but one of a noisy trajectory or gradient. This shows up implicitly in the reviewer biases in which abstracts were accepted (in terms of what’s “in” and “out”), which is also something the organizers needed to be aware of in constructing the conference program, which they briefly talked about in the opening remarks. The exercise of revisiting and writing up my notes really helped me consolidate what I took away from the main conference, and I will raise the disclaimer again that this in no way represents a “true” reflection of the conference themes, but my filtered version, so check out a few of my personal favorite themes in Section I.

Second, after 7 years of going to conferences, it finally occurred to me in Lisbon that I don’t (explicitly) know how to conference. There are a lot of conventional wisdoms floating around about how to do conferences, and sometimes specific instructions for specific conferences, most of which about how to prioritize watching talks vs. going to posters vs. socializing. A lot of that is helpful, but I think it dawned on me that nobody really ever gave me systematic instructions on how to think about this, and that probably most people didn’t get this as a part of how-to-science manual either. In Section II, I will continue to not give instructions, because there is no one-size-fit-all advice: actually it’s different for different people, at different conferences, and most importantly, at different stages of career. At the same time, it seems to be a shared experience that people often have the feeling that conferences are overwhelming, exhausting, and guilt-inducing. I will write a bit about this realization, and make some meta-suggestions for how to conference—in particular, setting goals that are appropriate for your interests, career stage, and personality.

Finally, beyond passively participating in the conference program, I had the good fortune to actively shape a small part of it by co-organizing a workshop with Roxana Zeraati on neuronal timescales, along with a line-up of fantastic speakers (and cool people). This was obviously a stressful and intense experience, especially on the day of, but it was also exhilarating and insanely productive scientifically. Not only that, I felt like I was able to connect with the people there—as human beings—in a much deeper way than I was able to while just chatting at a poster, or casually meeting up as a part of socializing at the conference (especially being a relatively introverted person). In Section III, I will give a (not so) brief report of the discussions we had on “Mechanisms, functions, and methods for diversity of neuronal and network timescales”, as well as some reflections about my experience as a workshop participant and co-organizer.

Section I. Highlights from Main Conference

I took notes for some talks and posters. They’re not very good notes. To be honest I’m not even 100% confident I left with the correct takeaways, I did go back to check some parts of the talks to consolidate when possible, but you know what they say: the best way to learn the correct thing on the internet is to write it wrong (so please feel free to correct me if I misrepresented something).

Cosyne is no SfN, but the volume and breadth of cool works is still incredibly high—bordering overwhelming—and everything looks interesting and at least tangentially relevant to the broader theme of neural dynamics and computations (at Cosyne? wow dude who would’ve thought), so it’s quite hard to pick which talks and posters to not go to. In the end, I basically give up and attended them when there was nothing else I wanted to do more. Afterwards, I looked through what interested me the most based on which of the talks I happened to be at that inspired the most thoughts, and they mostly fell into the following (unsurprising) categories (though I had to do some shoe-horning for some).

(Links typically point to recordings of that talk or the online version of the poster, when I could find them, otherwise poster numbers are in brackets)

I love “weird” stuff

Hands down, what I enjoyed the most are talks that are quite different from the “classical” Cosyne stuff, and I’m very grateful that the organizers decided to include a broader set of topics for the talks. Nothing against PCAs & ANNs, it’s just that the bandwidth (i.e., new information per talk) is much higher for talks on weird stuff I’ve never thought about before. I guess I enjoy the feeling of hearing about ideas that could fundamentally change how I think about something, or just ideas that are so completely unfamiliar to me that it triggers a novelty reward. Of these, I want to highlight 2 talks and a poster:

-

Asya Rolls talked about the similarities and differences between the nervous system and the immune system, how they both make “memories”, and how memories in these two systems interact. She showed results from some super interesting experiments, and her talk is online, I highly recommend checking it out because it’s quite accessible as an outsider to immunology. The insight in a nutshell is that these two systems face similar environmental demands, in that they have to adapt to novel situations, especially situations where remembering how to optimally act might save your life the next time around (think a tiger vs. a novel pathogen). Like a lot of people, I got my Immunology degree from Twitter after COVID vaccines dropped, but never did I think about how the brain might be involved in the immune response. Among their crazy results: chemogenetic activation (via DREADD) of dopaminergic neurons in the VTA leads to a more potent lymphocyte response, which (I think shown in a separate experiment) can trigger a pathway through the bone marrow (?!?), resulting in proliferation of cells that can kill tumor cells more effectively after VTA activation. In plain English: when your “reward system” is activated in coincidence with an immune response to a foreign pathogen, the immune response to the same intruder is stronger the next time. On the flip side, you could have an allergic reaction (which is a “rogue” immune response) upon holding fake flowers if you have an allergy to real flowers, just because your brain recognizes it. A quite relevant example is “phantom COVID symptoms”, where knowing somebody who you were in contact with that tests positive will immediately make your throat tingle (though no causal claims are made with respect to this)—this literally happens to me every other week. Lastly, she showed that this neuro-immune link is quite specific, where ensembles of neurons in the insular cortex (or, insula) that were active during initial infection can trigger a similar immune response when artificially activated, but not via non-specific activation of neurons across the insula. This is literally the insular analog of memory engrams in the hippocampus and amygdala (see Josselyn 2020, shoutout to the 6ix). This really brought home the message for me that we should rethink what “placebo” means, because anything that the brain “sees”—or “thinks it sees”—could cause a very real bodily response, and her works explore the extent to which this is true.

-

Another talk I really enjoyed was Susanne Schreiber’s: it’s not really “weird” at all in the context of neuroscience, just mind-expanding in how she bridges between fundamental biophysics to large-scale network dynamics. In a lecture about single-neuron dynamics, you’d typically learn that neurons are classified as Type-I or Type-II depending on how their output firing rate scales with input, and, importantly, that this is a static characteristic of a neuron. Roughly speaking, Type-I neurons have a continuous transition from no-firing to firing, hence (theoretically) being able to fire at arbitrarily low firing rates given a weak enough suprathreshold stimulus. Type-II neurons, on the other hand, have a discontinuous jump: at some point when the stimulus gets strong enough, they go from not firing at all to firing at a much higher rate. Apparently, there’s a third type of firing pattern called homoclinic action potentials, which is a state-dependent mixture of Type-I and Type-II, and what Susanne talked about is that neurons can switch from being Type-I or Type-II to homoclinic due to a variety of factors, and this seemingly small single-neuron change can have big consequences for network synchrony. In particular, temperature and pH—two things that aren’t typically included in models neuronal dynamics—can trigger such a change (in addition to extracellular concentration of some ions), and the resulting network (hyper)synchrony can resemble pathological states like epileptic oscillations. To me, this should be a textbook example of how biological and physiological mechanisms can impact brain function through altered neural dynamics, and you can check out the talk from the link above.

-

To wrap up this section on weird stuff, Chaitanya Chintaluri gave a poster presentation on an idea so wild that I think it’ll either be a Science paper or forever-bioRxiv (no disrespect at all, and I told him this on the spot). If you’ve ever worked with in-vitro cultures, or are familiar with fetal neurodevelopment, you’ll likely have encountered the fact that networks entirely isolated from inputs often still have (quite large) network activations, i.e., network bursts or “avalanches”. It’s a strange and very robust phenomenon, and you see this anywhere from primary cultures, stem cell-derived planar or spherical networks, as well as slices. On one hand, it’s not that crazy to imagine that a couple of neurons might spontaneously fire, and when the connectivity is right, the small handful would trigger a large network response until exhaustion, and the cycle repeats itself after some neuronal or synaptic adaptation time. On the other hand, you can ask a simple yet perplexing question: why do these neurons want to fire, especially considering the fact that action potentials are quite energetically costly? Chai’s proposal was that getting rid of energy, specifically in the form of excess ATP, is precisely the reason why neurons want to fire, because accumulation of ATP in the neuronal mitochondrial is apparently toxic. There are a lot more details in the biology that he’s worked out, and heavy-duty stuff like free radicals, Reactive Oxygen Species, etc. etc., and they built a computational model to reproduce this effect. I have no idea if this will turn out to be true, but it goes along with my pet conspiracy theory that action potentials are not for communicating with other neurons, but rather stems from a neuron’s intrinsic and uncontrollable urge to seek relief from some kind of electrochemical pressure state.

Neural Manifolds Plus (TM)

What’s a Cosyne blog post without some neural manifolds? Dimensionality reduction remains the workhorse of computational neuroscience, not only in neural data, but now also in high-dimensional behavioral tracking data. I think it’s fascinating that there is now essentially an entire subfield that not only uses dimensionality reduction methods, but works towards building a theoretical framework to explain these results. It feels a bit circular if you assume PCA came first, but if I look back beyond the last 5-10 years, it’s clear that latent variable models of neural population dynamics, as a theoretical construct, have always been a main topic for the community of people at Cosyne. It’s just that it used to be the case that both the development and application of these methods—I’m thinking GPFA, (Poisson) LDS, etc.—were reserved for the specialists and the computational collaborators, but now many more people at least have the opportunity to apply PCA or UMAP out the box from Anaconda. In any case, it’s basically impossible to cover all the works on this topic in depth without a dedicated blog post (like Patrick Mineault’s), so I simply have a couple of examples that I thought were interesting, either on the method front, or using it in some way to append existing theoretical understanding of computations in the brain.

-

One of the emerging themes in the realm of dimensionality reduction is to use models that combine continuous latent dynamics and discrete state transitions to learn a hierarchical representation of neural data, from which the discrete states can then be mapped to annotated or learned behavioral states/motifs. You’ll see this throughout this blog post. A classical example is a bunch of (local) linear dynamical systems (LDS) with between-state transitions defined by a (global) Hidden Markov Model (HMM). Adi Nair talked about a similar approach of using recurrent switching LDS, and I always find it interesting when these kinds of methods are applied to weird subcortical areas, in his case, the hypothalamus. I know close to nothing about the hypothalamus, but I guess the traditional view is that specific ensembles of (ventromedial) hypothalamic cells encode for specific and non-overlapping sets of actions related to aggression and mating (like sniffing, mounting, etc.). This wasn’t really the case in their data at the single-cell level, so the next best hypothesis is that cells with mixed selectivity coordinate to form a population code (like a humpy/bitey PFC), and they use a RSLDS to model the neural dynamics within and across discrete states. He showed, surprisingly, that there is a mixture of hand-annotated actions in all the switching states, so they don’t have representations at the level of discrete actions, but some contextual state. Or mood, if you could call it that in a rodent. And because it’s a bunch of LDSs, you can do linear system analysis, and he showed that each of the latent linear dynamical state spaces (i.e., the linear dynamics matrix) has a long-timescale(!) integration dimension, and that the proportion of aggressive actions scaled with the time constant of that integration dimension, like some kind of rage meter.

-

The hippocampus is another system for which there is a prevailing theory (or dogma, depending on who you ask): surely everyone knows about the Nobel Prize-winning discovery of place cells by now, and it’s known that when the animal is placed in a different environment (same physical arena but with different sensory cues), those place cells still have place fields in the new environment, just at different locations compared to the old one. The prevailing theory of “remapping” proposes that those place cells essentially get activated by different sensory inputs in the two different environments, hence the “places” they represent are different. In plain English, it’s like each place cell in the hippocampus is an index card (like in the library), and when you take the stack from one library to another, you can rewrite the code on each individual card—independently—to point to a different section. André Fenton proposes quite a different and fascinating hypothesis based on neural manifolds in the hippocampus. If I understand correctly, his theory of “reregistration” goes like this: instead of place cells with independent degrees of freedom in their place representation, most place cells actually participate in the same population code that’s stable across different environments, which means that their co-firing (or correlation) structure are important and preserved to form this theoretical neural manifold. In some sense, this population dynamic just happily trucks along the on-manifold trajectory, as it does, no matter what environment the animal is in. How the remapping or re-regrestration comes in is through a small handful of anti-cofiring cells, which transforms or “projects” (in a loose sense) the stable population code differently in different environment. Extending the index card analogy, it’s as if most index cards have just a single and permanently printed code, while a few of the rest make up the codex that tells you how to interpret the same code in different libraries, e.g. under one filing system (say, in Canada), the number on the index card takes you to the non-fiction section, while under a different filing system, the same code takes you to the comic book section. He showed tons of experiments and analyses, and I’m not confident I’ve completely digested the link between the conceptual level and the specific analyses, but he uses Isomap—an old school nonlinear dimensionality reduction technique—to form these manifold representations, and I wonder how many of our neuroscientific conclusions would be changed if different (and more complex) dimensionality reduction techniques are used in practice.

-

Another interesting thread of investigation is, of course, the mechanisms behind the consistently observed low-dimensional neural manifolds. Renate Krause looks at this in vanilla recurrent neural network models trained to perform some simple classical tasks, which are good model organisms because they exhibit two puzzling characteristics: first, the trained neural dynamics are (linearly) low-dimensional, often characterized by the small number of principal components required to explain most of the variance in population rate; second, the trained network weights are usually much higher dimensional, measured both by the number of PCs required to capture total variance as well as impact on task performance when removed, which means that there are many directions that the weight matrix can project the activity onto. So the question is, how are the dynamics kept to be low-dimensional while the weight matrix can push it around in many directions? To bring harmony to these two contradicting observations, she defines a new concept of “operative dimension”. I think the gist of it is that, instead of considering the global weight matrix, she takes points along the low-dimensional neural dynamics, and finds directions in the weight matrix that will produce the largest local change in activity if removed. Through this procedure, she finds that it is possible to extract a low-dimensional connectivity that shapes the low-dimensional dynamics without performance loss, you just have to look in the right way. This is quite complementary to the strand of work that starts from low-dimensional connectivity via, for example, low-rank constraints, and it’d be really interesting to see if they somehow converge onto the same learned connectivity or operative dimensions.

Unstructured behavior

You really can’t understand the brain without trying to understand the behavioral demands animals face, so I’m pretty happy that Cosyne continues to move in a direction that includes more behavioral work, especially unstructured behavior. Similar to what I said about neural data above, you can learn some pretty interesting continuous and discrete dynamics when looking at the behavioral data alone, and it’s even more cool when they are linked to low-dimensional representations in neural data. In many of these algorithms, the heavy-lifting is done by nonlinear transformations and embeddings, i.e., deep neural network, instead of PCA. You might sacrifice some interpretability, but the nice thing about behavioral data is that you can always look at the learned sequences or motifs and make sense of it just by looking at or listening to the data.

-

Bob Datta started off the Cosyne talks with an absolutely stunning keynote, centered on a tool his lab developed, MoSeq. I don’t even want to write much about it, because I’d just do the talk a disservice. And really, you basically don’t need any prior knowledge in anything to understand and enjoy most of the talk. But in a nutshell, MoSeq is a method that models time series data, whatever modality it comes from, and when applied to videos of free behavior, it automates the segmentation of behavioral “syllables”, or short snippets of stereotyped actions. This is in contrast to the hand-labeling approach of having some poor grad students sit in front of the computer all day annotating when the animal is doing what, on a frame-by-frame level. Besides bringing sweet, sweet relief to the hand labeler, the unsupervised algorithmic approach does it with perhaps a little less labeler bias, deciding on motifs purely based on statistics. That is not to say that the algorithm is free of bias and human intervention, since you presumably still have to pick hyperparameters like number of syllables to extract (or do so via cross-validation). But once you have meaningful syllables or motifs, you can do a lot of interesting things with them, such as characterizing individual motif statistics like duration, as well as the transition structure between motifs that make up longer sequences of behavior. You can also ask how interventions like giving mice drugs change their motifs and transitions, as well as their neural correlates. Bob talks about all the above, the talk is fucking awesome, and I can’t imagine how many grad student-hours went into making these snazzy figures. In the end, he speculated about how these syllables may be “atoms” of behavior, and I think it’s a fascinating thought that deserves careful consideration. Obviously, the algorithm decides the form and duration of these syllables, and statistically, it’s always a trade-off between generalization vs. precision, i.e., how different does a snippet have to be from the rest to be considered an entirely different syllable? Furthermore, you can always split an atom, and you can construct things from atoms, and when animal behavior is involved, I definitely wouldn’t bet my money against the possibility that individual variations in syllables are context-dependent. In other words, maybe there is an average canonical “sniff”, but how individual sniffs differ may be a function of what the animal was doing right before, or the larger context it’s in, not just random noise. Anyway, highly recommend just watching the talk, and I’m really stoked about this sort of stuff, it’s right in the mix with embodied cognition, and would be especially great if we can move it into the wild.

-

Unaffiliated but very much related, Yarden Cohen deals with the same high-level problem of segmenting continuous time series into meaningful “syllables”, except the syllables here are of a totally different kind. Yarden studies songbirds like canaries, which can apparently produce extremely long and beautiful songs (check the video for a very entertaining example). Similar to behavioral videos, you can ask expert annotators to look at a song spectrogram and segment motifs by hand, or use machine learning approaches to do it automagically—enter TweetyNet for birdsong segmentation and classification. From well-segmented syllables, one can ask analogous questions of how syllables transition from one to the next to make up songs, as well as their neural correlates. All my questions about behavioral motifs stand here, though you might imagine birdsong syllables to have less (meaningful) variability from instance to instance?

-

Wherever brain and cognition are involved, you could potentially set up a contrast about discrete symbolic computation vs. continuous dynamical systems. Not that this view was (implicitly or explicitly) championed by any of these speakers, and they all eventually have a mixture of both perspectives, but Heike Stein gave a really interesting talk that delved more into the discrete motif (or state) and explicitly described the dynamical system. Instead of condensing high-dimensional data to a priori unknown low-dimensional embeddings or syllables via unsupervised learning directly, she first uses DeepLabCut to just get the motion trajectories of the 4 paws of mice running on a little laddered wheel throughout the course of a motor learning task. The mice are (quite literally) stumbling over themselves, one foot over another, when they first start trying to run on the wheel. Over time, they somehow learn the phase offset relationship between one paw and another that’s required for a stable gallop. She models the paws with coupled oscillators that can oscillate at 3 different speeds, where stable movement is achieved essentially when the oscillators are locked to the same speed (but with a half-period offset). The different frequencies and coupling are instantiated by—you guessed it—a Hidden Markov model that transitions between different (discrete) states, where the synchrony of the paws are different between these states, some of which are desireable for smooth running on the wheel. In other words, it’s a switching rotational dynamical system. You can start to see the high-level similarities between the methods employed by these very different subfields of computational neuroscience. Anyway, I’m always a fan when somebody explicitly writes down a set of dynamical equations, and even more so when it can start to capture something as complex as animal behavior with coupled oscillators.

Bringing biological realism into models of computation

I don’t know if this is going to be a “trend” at Cosyne, but I think injecting biological realism into models of neural computation should be a hot topic moving forward, even if challenging. We have lots of great models of neural computation in deep neural networks (DNN), but as great as they are in reproducing performance on visual and other tasks (recent Twitter debates notwithstanding), they don’t possess much biological realism aside from the rough preservation of anatomical hierarchy (e.g., through the ventral stream)…and the “neural network” in their name. Yes, I know there was a whole BrainScore workshop on representational similarities between DNNs and the brain, but that’s more about outcomes of models, not really introducing more structural elements into the model a priori, or biological inductive biases, if you want to call it that. And that’s not to say those works are not interesting or valuable, it’s certainly puzzling why some architectures can produce real neuron-like activity. But coming back to my point here, I personally find it important to also ask how including biological realism (e.g., local connectivity constraints, cell-type specialization, excitation and inhibition, etc.) can not only alter task performance, but more so, how these features unlock and/or constrain the types of computations a network can perform and how.

-

One of the most obvious biological details missing from the currently popular models of brain computation is action potentials. We don’t have to go down the spike vs. rate rabbit hole here, that discussion needs a book (or periodic Twitter debates). But assuming you care about spikes to start with (your brain obviously does), I think it’s interesting to ask how spiking networks can implement learnable computations like rate-based DNNs do. That’s why I was pretty excited that Dan Goodman gave this year’s tutorial on spiking neural networks, because these things certainly influence what people—especially junior researchers—then remember and find interesting moving forward, consciously and subconsciously. I’m not saying a linear integrate and fire neuron has all the biological details we need, I just think it’s a lot closer to how a real neuron works than a dot product and sigmoid. In the tutorial, Dan goes through some basic spiking neuron models, as well as some fancier stuff you can add like adaptive spike thresholds. I work with Brian2 regularly, so I’m familiar with SNNs as mechanistic simulations, but it was very cool to learn about surrogate gradient descent (quite clever) and training spiking networks in pytorch to do tasks, especially recasting the same coincidence detection problem to be solved by delays vs. weight matrices. All the material is online, and you can follow along with his recording, highly recommend.

-

Adaptation (e.g., spike frequency adaptation) is another effect largely missing from DNN models of the brain, and I saw a really cool poster from Victor Geadah (2-41) that incorporates this into rate-based recurrent neural networks. Basically, instead of a fixed activation function for the neurons in the network, they have a flexible activation function from a family of parameterized functions, and those adaptation parameters are controlled by an internal RNN. It’s a bit of a yo-dawg situation, because each unit in the big RNN has a small RNN inside that controls its adaptation, but the detail doesn’t really matter here, the point is that now every unit in the network has a flexible and dynamic activation function that changes over time as a function of the input, as well as the cell’s own state and that of the network as a whole. The cool part is that even though the whole thing is trained end-to-end to maximize performance on some sequential tasks, the network ends up mimicking adaptation seen in experimentally measured neurons. I think he also compared to the condition where each unit’s activation is learned, but fixed over time, and finds that the dynamic adaptation is better. I found a preprint online, but I think the poster had more. I think this could potentially have a really nice link to gated RNNs in machine learning (e.g., LSTMs and GRUs), and can unlock questions about potential mechanisms of controllable adaptation, both internal and external to the neuron (like neuromodulation).

-

Another example of bringing realism was Mala Murthy’s talk (speaking on behalf of Ben Cowley), where she really took biological correspondence to the max when comparing DNNs to a circuit in the fly brain. The talk is unfortunately not online, so I know I will butcher it since I can’t revisit it, and I would’ve really liked to. But the gist of it is that, since the fly brain is quite manageable in size and already well-characterized, where many neurons are even individually named like in C. elegans, you could actually build a DNN model with one-to-one correspondence for some of the neurons. You can imagine churning through the whole DNN-is-brain pipeline here, training the network on task alone (where the input is what the fly sees and output is its task-related behavior, I believe) and compare activation in the matching real vs. artificial neurons, and that would already be cool. But they take it to a whole different level: because those neurons are individually identifiable, you can do single neuron knock-out via optogenetic(?) inactivation, and see how shutting off each neuron impacts the fly’s behavior. Then, you can mimic that whole procedure in the artificial network by simply ignoring the activation of those corresponding neurons while asking it to match the behavior of the minus-1-neuron fly. I think this approach is super cool, and definitely starts to move beyond simple correspondence towards causality. If anything, it gives us more confidence that the derived DNN is a more faithful model of the real thing we’re trying to reproduce.

Humans, oscillations, and mechanistic modeling

I complain about the lack of representation on oscillations and humans at Cosyne all the time, so it’s only right to give credit where credit is due. This year, I was genuinely surprised about the number of presentations on human electrophysiology and fMRI, as well as mechanistic modeling without task constraints, and there was even a whole session of talks on neural oscillations!!! Every time I passed by a poster that had something to do with one of these areas, I did just the tiniest fist pump-another win for the team. I ended up noting down quite a few interesting ones, but I don’t want to describe them all in detail, so here’s a lightning round to close up the scientific programme:

-

Michael Long gave a fantastic invited talk on dissecting the neural substrate of human language understanding using invasive electrophysiology, i.e., electrocorticography (ECoG). As far as the intersection between human cognitive neuroscience and computational neuroscience goes, this is as good as it gets. Through some clever tasks and free conversation, he shows functional specificity of different brain regions for different phases of communication (presumably based on their high gamma response): auditory areas for perception, motor areas for speech production, and—what he focuses on—mid-frontal regions for “planning”. These regions are not for generic planning involving verbal instructions or sound production, but really specifically for language understanding. He even showed some causal perturbation data where electrically stimulating these planning areas results in some really interesting errors in the participants’ response—and this is just half of the talk. The other half looks at data from some really cute singing mice, also with causal manipulations to boot. Really nice talk, and probably one of the few times you will see linguistic theory in a Cosyne talk. As a side note, the task he uses would definitely be a good one to deploy on RNN-based language models, like the ones Vy and Shailee presented on in our workshop (if you end up reading that far).

-

Anna Shpektor talked about hierarchical representation of sequences in the human entorhinal cortex, with fMRI data (gasp of horror). Sharing the Nobel Prize with the aforementioned place cells in the hippocampus, the entorhinal cortex (EC) has grid cells, which are thought to be the basis functions of the downstream dirac delta-like place representations. There are two prominent observations about grid fields (is that what they’re called? Like place fields?): first, they can emerge in sequential tasks that don’t necessarily have to do with space, thus leading to the interpretation that they are important for representing generic sequential structures in some platonic concept space; second, grid cells are anatomically hierarchical, meaning that a grid cell’s grid field resolution depends on that cell’s position along the dorsal-ventral axis of the EC. Anna asks if the union of these two observations is also true: do abstract sequences that have some temporal hierarchy, e.g., from letters to words to sentences, also have representations in the EC that fall along the front-back axis (anterior-posterior for the weird upright human)? Long story short, she finds this to be the case, and presents great data in a super engaging talk. But as beautiful as her science was, the ending of her talk was one of these things that I will always remember, even when I’ve forgotten all about the results: as a Russian-born scientist, she spoke out against the war in Ukraine, and used this opportunity to highlight some organizations that were helping refugees from, as well as people still, in Ukraine. Here’s a Google Doc compiling some of the main ones, and you can check the video of her talk for some more. I don’t want to dwell on this too much because her science deserves recognition on its own, but seeing this live—even though it reminds us of the real and ongoing tragedy—somehow brought a weird sense of normalcy (and only then did I notice the yellow and blue stage lights throughout the talks), perhaps because we still acknowledged and cared about the world outside of the bubble.

-

Okay, lightning-round for real now: on the experimental side, Jacob Ratliff talked about the role of somatostatin+/nNOS+ cortical inhibitory neurons on network synchrony. It’s always interesting to learn more about cortex-wide synchrony (and the associated low-frequency oscillations) during low-arousal states of the animal, and Jacob presented some cool data on the causal role of these genetically identifiable and long-range inhibitory neurons. Optogenetically activating them while the animal is in an alert state (with desynchronized network activity) will synchronize the pyramidal neurons (at 4-6Hz) and put the animal into a quiet state. I think that’s quite remarkable, I didn’t expect such a small and niche cortical population to have such a global effect, which I tend to think is a role reserved for subcortical nuclei. Ana Clara Broggini (3-32) really tested the idea of neuronal resonance by entraining V1 neurons with optogenetic stimulation and seeing their input-output function, as well as how downstream V2 neurons respond. Surprisingly, she finds that V1 neurons don’t have gamma range narrow bandpass filtering properties (as previously reported) when input is directly injected via opto, and that entrainment is most effective at lower frequencies. This seems consistent with the fact that global synchronized states usually exhibit low frequency oscillation, and also calls into question the role of gamma in interareal communication (i.e., communication through coherence). Joseph Rudoler (1-52) and Mila Halgren (1-118) both had posters looking at the 1/f exponent in power spectra of human intracranial electrophysiology recordings, and I’m very much here for this (and secretly hope Cosyne will one day be taken over by weird 1/f stuff).

-

On the modeling side, Shiva Lindi talked about some heavy duty mechanistic modeling work on how the corticostriatal system generates beta oscillations. Tour-de-force exploration of both rate and spiking neural networks, and as per the norm with this type of work, lots of model parameter research is required to produce simulations that match experimental data (would be mighty nice if somebody could build a machine learning tool to assist with this kind of model discovery…). Natalie Schieferstein (1-120) uses spiking neural network models and mean-field approximations to study ripple oscillations in CA1, proposing a novel inhibition-driven mechanism that can explain some peculiar observations behind sharp-wave driven ripple frequency drifts—neat because she uses the empirically observed phenomenon as a criterion for model selection. Julia Wang (3-45) had a poster on using variational autoencoders to detect low-dimensional and interpretable latent states from LFP and EMG during sleep, which nicely correspond to awake, slow-wave sleep, and REM sleep. Really nice approach and something I will definitely draw inspiration from. Lia Papadopoulos talked about her work on modeling the effect of arousal on the (metastable) dynamics of clustered networks. If you imagine separate neural population attractor states to be encoding different stimuli (like different sounds), then perceiving these stimuli accurately over time requires the network to quickly switch between these “metastable” states. Lia proposes a beautifully intuitive explanation for how arousal essentially lowers the energetic barrier between these states, such that optimal (de)coding is achieved at moderate arousal, when the network is at a balance between coding flexibility and fidelity. Finally, Merav Stern, also working with Luca Mazzucato, talked about how heterogeneity in neuronal timescales can arise from clustered networks. As a perfect segue into the next section of this blog post, she describes a potential mechanism that could explain the often-observed distribution of timescales across cortical circuits, which is simply to have a network with a distribution of cluster sizes. Really cool work, and since I just found it for myself, here’s the preprint.

Section II. Meta Thoughts on Conferencing

This was my third Cosyne, and the first in my full year now as a postdoc. You’d think I know what’s going on by now, and in some sense, I do: show up, get overwhelmed by the firehose of scientific content (especially the midnight posters man, damn), try to go out and socialize as much as possible, try to wake up before mid-day, rinse and repeat. Like I mentioned, there are lots of conventional wisdoms that get passed down about how to do conferences, ranging from “don’t go to the talks, posters are the only place where you get to engage” to “conferences are about drinking and meeting people”. Most of these wisdoms are conditionally true, meaning that they are true if you, the attendee, satisfy some criteria. These criteria vary depending on your career stage and goals, your personal interest, your personality, and more. Imagine telling a first year PhD student without a concrete project that “conferences are for networking”, that would be a bit bizarre—even if you made lots of good contacts, what would you do with them? Even though I would certainly not do this now, I had some great times as a PhD student going to literally every event on the program at SfN and Cosyne and then just going to bed at a reasonable time, but that would certainly not suit my career stage now (and also I just don’t enjoy it).

Maybe I’m the only idiot with this problem, and it boils down to not knowing myself well enough, but I feel like whenever there is a schedule and a mob involved, I just default to following the schedule and the mob, and the thing about conferences is that the schedule runs from 8am to 8pm (or midnight…), and some subset of the mob is drinking or doing other fun stuff from noon to 4am. It’s literally impossible to do it all, and even if you somehow managed, your mental and physical health are probably rapidly deteriorating. I know a handful of people who somehow have the energy to drink till 4am and show up to SfN morning posters at 8am, and I tip my hat to them, but I’m not that guy. I actually used to get sick after every conference trip, because the immune system probably quit by the time I get to the airport, after 5 days of intense mental and physical exhaustion. But if I didn’t do it all, I always felt bad about not taking full advantage of this scientific opportunity, and simultaneously experiencing fomo while everybody skipped the afternoon and explored the conference city. It’s lose-lose-lose. So yeah, in some sense, I’ve been around long enough to get what’s going on now, and I survived the years of passively getting pulled into various currents and then feeling bad that I didn’t get pulled into a different current, I just don’t really enjoy it anymore.

If you are one of these people that know exactly what you want to get out of a conference and don’t care about anything else on the schedule or who’s doing what, then stop reading now, because it’s going to be obvious. And just to be clear, I don’t think I’m offering any groundbreaking insight here, just hindsight common sense. In fact, I’m not even offering much insight about what to do at a conference, all I’m saying is that, if you’re some kind of passive completionist like me, like one of these people that would go through every branch in those choose-your-own-adventure books, this entirely overwhelming endeavour could be a much better experience when you approach it from a different perspective, and the key is realizing that, like many aspects of life, you simply cannot do it all, and in order to not exhaust yourself and feel like a failure, you have to adjust your expectations and set priorities.

On the flight to Lisbon, I miraculously had the lucidity to realize that I’m not a PhD student anymore (it’s like waking up from one of those nightmares about missing an exam), and that maybe I should approach this thing differently? Then I quickly realized, I don’t know how to approach it differently because I hadn’t been approaching conferences with an intention at all. So I quickly jotted down some goals / priorities. My number 1 priority was to enjoy my time there, which meant giving myself the permission to take a timeout anytime I needed, including from other people, and try to maintain at least some of my daily routines at home, like meditating, stretching, and short workouts everyday. I managed to do this on about half the days, and I certainly didn’t enjoy every single day of the conference, but overall, I am way happier about the fact that I didn’t completely get wrecked by the 5 days and managed to keep some semblance of (and also took advantage of some local and conference hotel amenities…). Other than that, I had some “conference science” goals, like learning 3 cool / unexpected things everyday, as well as something directly relevant to my projects. The want for the latter is obvious, but the former is what I actually really enjoy about big conferences, i.e., learning about stuff that I would otherwise never seek out. Section I of the blog post is the result of such attempts. I had roughly similar goals for “networking”, but with the addition of meeting some people that I really get along with but not necessarily have any overlaps scientifically, aka anti-“networking”. I won’t go through all of them, but you get the gist. If I was a starting PhD student, I would probably prioritize seeing as much stuff as possible just to get maximum inspiration about what’s going on in the field. As a postdoc, you have to balance that with optimizing for future opportunities, both in and outside of academia. I’d imagine as an established PI that don’t need too many new directions or opportunities for collaborations, you’d just want to catch up with people that you don’t get to see often, and maybe talk to some journal editors. I was pretty lucky that my poster got rejected and I only had to give a very short intro for the workshop, otherwise all of this is moot because I’d probably be skipping everything to prepare my poster last minute.

Anyway, the point is just that it’s important to balance science, socializing, and rest / self-care, and this is definitely not in the “Science for Dummies” manual. All three are important and can come in different forms, and one naturally wants to prioritize the first two, being at a conference and the first in-person one in ages, but it’s really important for myself to not burn out and just be cranky all the time. At the same time, the fomo is real: it’s hard to say no to science or partying when I just need some alone time, but it’s important to distinguish fomo from “I actually really want to be there”. With some explicitly defined priorities, it turned the same objective experiences from being guilt-inducing stressors to feeling like I’m actually accomplishing stuff on my checklist. Wow I just rediscovered the glass-half-full parabole. At this point, I’m still trying to figure out a mode that works for me. It helped a lot to have defined some concrete goals at the beginning, I didn’t meet all of them, but I made an effort, and it shaped how I consciously approached the thing.

To close, I’m gonna stream of consciousness a bit, and also talk about how strange of a social custom conferences are. Thousands of people show up at the same place, and for the next 5 days or so, everything within this bubble is all that matters. This is obviously a lot of fun and scientifically important, but sooner or later I get this inescapable feeling that I don’t care about much of this stuff, and none of it matters anyway, especially when you zoom out to a larger context of global events, like the ongoing pandemic and a full-on invasion in Ukraine, in addition to the mundane daily things that we deal with in our regular daily lives. I don’t mean to be disparaging to people who can just focus on the science at the conference, in fact, I’m envious, because I came all this way here and there are so many people and learning opportunities around me, why not enjoy it for what it is? But it was also really great to then talk to people who felt very similarly, and we can have a laugh about the quiet absurdity of this whole thing. Maybe I’m not super commited to my science, or science in general, but I feel like when I meet people, I almost never want to talk about science as the first thing. I don’t know why, and it’s really weird because, obviously, it’s a contextually relevant conversation starter. It’s not that I never enjoy talking to people about science, not at all. But I guess it’s a lot easier when I already know them a bit as people.

To push this even more, I think a key objective at conferences is not necessarily to find relevant science, especially in an age where everything is online. In fact, there’s too much stuff online and too little time to consume it all. All the talks are streamed, and most posters are online and curated, with preprints to boot. I think the key is to meet people you enjoy spending time with, who are on the same wavelength and with whom you might then have fun working or talking shop. For me, this often manifests as quiet real talks in a loud place, around some beers, but it’s different for different people—figure out what you enjoy. I also had a great time surfing (aka getting completely bulldozed in a storm) with some strangers, and then got a nice lunch and chat afterwards about completely random life stuff. Of course, as a dude with no real life responsibilities that enjoys drinking beer and getting turnt, I’m pretty lucky that a lot of other scientists also like doing that, so “networking” sometimes happen spontaneously when you end up completely shitfaced at a latenight soup restaurant. As someone who doesn’t enjoy that, you’d probably have to work a bit harder, but a nice lunch and stroll is always possible, especially if you can open up and connect without being lubed by alcohol. I thought the conference app where people self-organized events like climbing, birdwatching, and surfing was a great addition to make the casual networking a bit more equitable.

And with that, onto neuronal timescales!

Section III. Workshop Report: Mechanisms, functions, and methods for diversity of neuronal and network timescales

This whole workshop-planning thing started quite spontaneously: I saw the call on Twitter maybe a week before the deadline, retweeted into the void and tagged Roxana to see if she would be willing to do this crazy thing together, and 4 months later, we’re in Cascais, sitting at the front of the meeting room as co-organizers in front of some dope speakers.

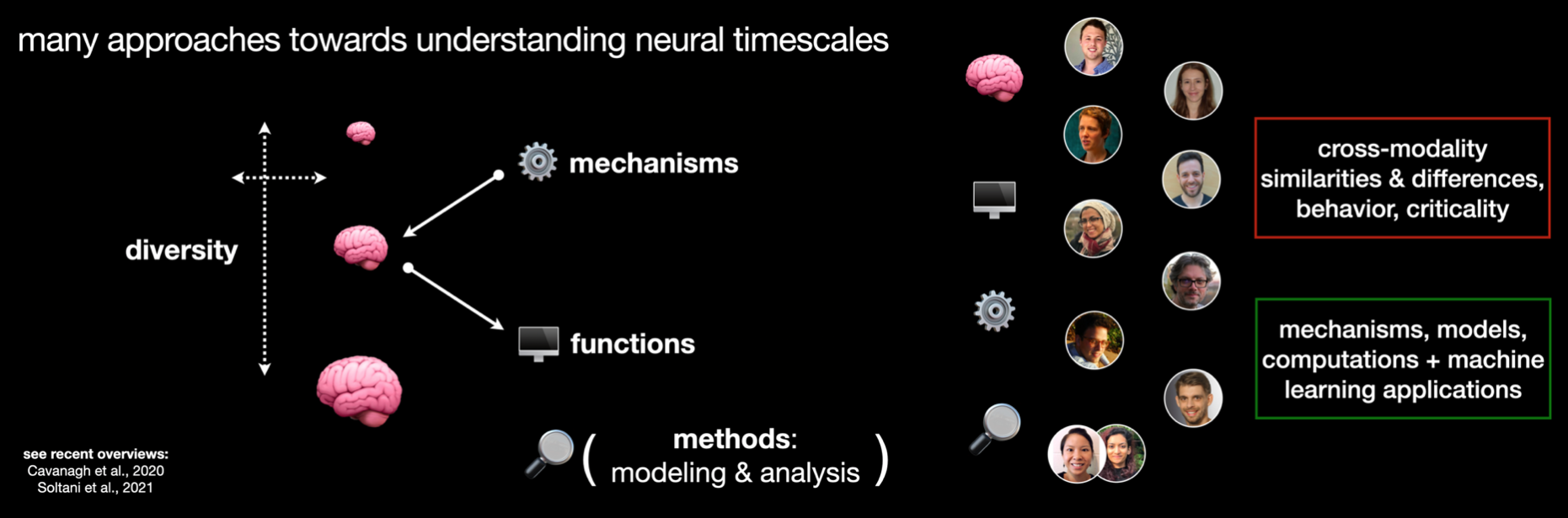

Note: this slide was from the introduction I gave, updated with our two heroic speakers that subbed in on the day of, circled in green.

Note: this slide was from the introduction I gave, updated with our two heroic speakers that subbed in on the day of, circled in green.

I never imagined myself organizing a workshop, much less at Cosyne. Nevermind the imposter syndrome, this just feels so…grown up? Luckily, the workshop organization wasn’t too much work after we had written up the proposal, especially with several of the speakers volunteering themselves after seeing the tweet. I don’t know if this makes the selection process more equitable, but it certainly made our lives easier. Nevertheless, we filled the rest of the roster while trying to optimize for diversity in a few different aspects, including gender and career stage, as well as to cover the huge breadth of topics that now make up the “field” of neural timescales.

And holy shit it’s a big wide field.

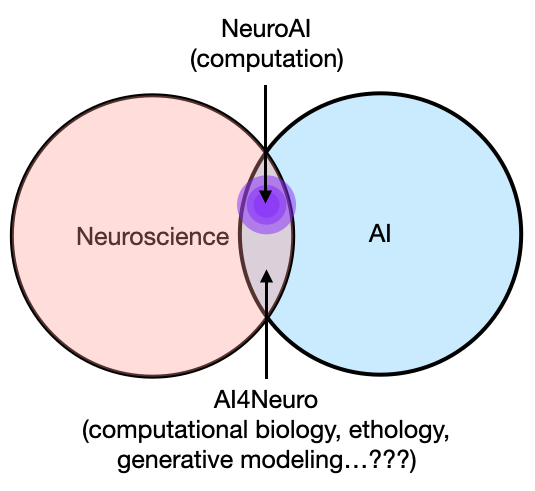

To provide a bit of context, here was our thought process while deciding on the topics, which became the workshop title:

- diversity: we are still amassing data from different brain regions in different model organisms, under different task constraints and from different recording modalities, to see just how timescales vary across space and time. Much of this data attempts to connect with cortical hierarchies (or gradients), as per Murray et al. 2014 (shoutout to the OG), but also to characterize heterogeneity more generally. Then, we chose 3 broad themes: function, mechanism, and methods.

- First, function refers to behavioral or “computational” relevance, i.e., why is a diversity or gradient of timescales useful for the organism? The cookie-cutter response that I personally always give is: well, the environment is dynamic and has many temporal hierarchies, so the brain should have the same in order to keep track of them. But this is quite vague, since it doesn’t specify where, how, and what the precise relationship between the two is, e.g., we can remember stuff from many years ago, does that mean there are neuronal dynamics whose timescale is on the order of years, or is there some kind of conversion factor?

- Second, what are the biological mechanisms that gives rise to the heterogeneity of timescales? This can include synaptic, cellular, and network factors that all mix together, which can produce unexpected behaviors. Of course, one has to be careful in linking specific mechanisms to specific observations at different spatial resolutions, which is to say, single neuron spike train timescales probably arise from a different mechanism than population-level timescales, which also differ from membrane timescales, all of which are important and potentially (casually) influence one another.

- Lastly, and implicit in all the above, are methods that drive these investigations, and that include both analysis and modeling methods. Fundamentally, are we measuring the same (biological) quantity if the algorithms we use to arrive at those final numbers drastically differ? And this is before even considering modality differences (e.g., continuous time series vs. point processes). This is something that’s good to catalogue, even if we cannot hope to be prescriptive in standardizing them. On the other hand, computational models are always a great model organism for investigating mechanisms that are infeasible to dissect in biological organisms, so how do we leverage artificial networks—be it spiking, rate, or deep RNNs—to study these same questions above?

You can probably write a whole book, or at least a 10-page review paper, to cover all the works that touch on the above in the last 10 years alone (see here and here for very relevant discussions). In the workshop, we got a chance to see some of the newest works in the last couple of years. I’m not gonna be modest about it: it was the best workshop I went to that day (but also probably in general). Not only did our speakers cover the incredibly broad spectrum of topics relating to timescales, there were such rich and unexpected intersections between works that it really felt to me like a coherent and self-organizing emergent entity. It’ll be hard to not completely butcher their findings (so feel free to correct), but in the interest of space, I will just briefly summarize the key points from each talk below, as well as some of the discussions and takeaways points we had.

Note that I mostly keep to third person throughout to avoid switching back and forth between the speaker and their team, just for convenience, but in all cases it was acknowledged just how much of a team effort it really was, from lab tech, research assistants, all the way up to the PI.

Summary of talks

-

Lucas Pinto started the day by blowing my mind with some experimental data that continues to remind me to put some respect on the Experimentalist’s name. In his virtual reality setup, mice have to make a left-or-right decision based on always-present or transient visual information as they run down a virtual track, depending on the specific task. During this, he has the ability to do focal optogenetic inactivation, but cortex-wide. This means that during any part of the mouse’s run, he could turn off any part of the brain in a systematic way to see how different regions contribute to, and what their timescales of involvement are in, e.g., visual perception (seeing the pillars), evidence accumulation (”counting” and remembering the quantity), or decision-making (recalling and acting based on the information). More concretely, the question is: if you shut down a part of the brain momentarily, how much does that screw up the mouse’s performance on the task, and for how long into the future is this deficit present for? Somewhat surprisingly, he showed that shutting off almost any part of the dorsal cortex will induce a performance deficit, suggesting that these “cognitive processes” involve multiple brain regions. However, how much and for how long the deficit lasts for depends on the inactivated brain area. Something about this systematic casual manipulation of the brain to interrogate cognitive faculties really blew me away, because you can start to disentangle, among other things, “when” vs. “for how long”, and this is without recording from a single neuron…but, of course, he also showed wide-field calcium imaging data from many cortical areas and recover a hierarchy of activity timescales. There is so much more, so you can check out the tasks here, and the main findings here, and we even got to see some super cool preliminary data on timescales across cortical layers.

-

Going from mouse to monkeys, Ana Manea showed us some ultrahigh field fMRI data from the monkey brain and related them to the classical single-unit timescales, as well as to functional connectivity gradients. I’m a huge fan of this type of work that bridges modalities, and her data showed that the spiking timescale hierarchy is preserved in fMRI, though I’m very curious what the explicit scaling factor is and whether that’s consistent between macro regions. The power of fMRI, of course, is that you can now look across the whole brain with high spatial resolution, and she sees a smooth gradient (e.g., across the dorsal visual pathway), and not only across the cortex, but in the striatum as well. This is really nice to see, but if you think about it, it doesn’t have to be this way at all, because the temporal dynamics of single-unit or population spiking could be totally different from that of hemodynamics recorded via fMRI, so I really wonder what drives this consistency. On top of that, she showed that functional connectivity gradients (a hot topic in human resting-state fMRI) are correlated with the timescale gradient, wrapping up a nice story connecting spikes, BOLD, and (functional) anatomy. Her paper with all the details is hot off the press, so check it out (it’s also super interesting to read the open reviews). One thought I had while listening to her talk the following: two autocorrelated signals tend to have a stronger pairwise correlation just by chance, and these functional connectivity gradients are typically taken as the singular vectors of the resting state BOLD covariance matrix. So how much of the functional connectivity can be expected by the signal statistics of the univariate BOLD autocorrelation alone? Beyond fMRI, she also had some spiking data from the “top” of the hierarchy, including frontal and cingulate regions, but I won’t cover that here other than to say I’m very excited to see more spike-LFP timescale comparisons.

-

From there, Lucas Rudelt, who was thankfully able to tag in for his advisor, Viola Priesemann, continued with the theme of crossing scales. He also threw the first punch, so to say, by introducing the concepts of criticality and scalefreeness. Their works start from a complementary and first-principles approach by positing a general process of activity propagation (i.e., branching process), which models how much influence a given “node” in a network (e.g., a neuron) has on its downstream nodes. This influence can be parameterized by a number called the branching ratio, or in their specific case of a neural network, neural efficacy (memory is a bit fuzzy here but I think it’s the same conceptually). Intuitively, a network with low efficacy does not propagate activity very far, or for very long, resulting in shorter timescales. You might expect the converse to also be true, that networks with high efficacy would have long activity timescales. However, among many of the results Lucas talked about, one surprising observation is that it’s a lot more complicated: when networks are set to an efficacy of near 1 (otherwise known as the critical regime), timescales can very quickly become longer, but are also more variable. In fact, it’s not great if the branching ratio is at 1, because then the propagation would explode, so it’s more reasonable to expect that neuronal networks operate slightly below criticality in order to balance information propagation and stability, and this slightly sub-unity region allows the balance to shift dynamically and flexibly. Furthermore, he makes the distinction of intrinsic timescale with information predictability, and find that timescale increases along the visual cortical hierarchy (in agreement with the original findings in mouse Neuropixel recordings), but information predictability decreases. This is quite puzzling as it contradicts the (more intuitive) notion that longer timescales would translate to higher predictability into the future, since things don’t change as quickly. You can find some examples of their work on this topic here, here, and at Cosyne 2022 poster 3-036.

-

Brandon Munn further represented the scalefree perspective with a tour-de-force overview of his PhD and postdoc works in Sydney. Well, it was both cross-scale and scalefree, in every sense of those words, as he reviewed some earlier works that span from local spiking analysis (looking at 1/f PSDs!) to macroscale modeling of the neuromodulatory and thalamocortical systems. Of the latter work done jointly with Eli Müller, he presented some really interesting results that linked cortical timescales (of fMRI signals) with matrix vs. core populations in the thalamus. Very broadly speaking, the thalamus has two types of projections to the cortex: “core” populations have precise targets in the cortex, while “matrix” populations have diffuse cortical projections. Among the many thalamus-cortex associations they find, the most topical was that the cortical gradient of timescale correlated with the level of core vs. matrix projections, i.e., regions with more matrix projections have longer timescales. This brings yet another complicating factor to the mechanisms discussion: in addition to single-cell properties and feedforward- vs. recurrent-dominated local connectivity patterns, the thalamic input may also play a direct role in shaping the “intrinsic” timescale—you might ask yourself at this point, what’s intrinsic at all anymore about intrinsic timescales? Just because monkeys and humans were not enough, Brandon also talked about some exciting new analyses he did with whole brain calcium recordings from zebrafish larvae and Neuropixels recordings from mice. Without delving into the details, he used a procedure called coarse-graining to lump more and more neurons together to see if population timescale lengthens with the number of neurons you pool together. Indeed, it does, and at the risk of putting words into his mouth, I think this raises the possibility that long timescales are maintained not by a specific neuronal population (say, from the association areas), but simply by circuits with integrated activity from more neurons. More broadly, this loops back to the idea of scalefreeness, where spatial correlations scale with temporal correlations, i.e., smaller populations, be it neurons or sand grains, sustain events of shorter durations, and vice versa.

-

To close up the morning session, Roxana Zeraati, my co-host, presented her recent works on a new method for robust estimation of timescales, as well as its application to characterize timescale changes from the monkey brain under attention manipulations. Fun story, I was asked to review her method paper more than a year ago, and that’s how I got to know her and her work in the first place, which led to the idea of co-organizing this workshop. The paper is now published (along with a nice python package, abcTau) so you can just go check it out, but briefly, she tackles the problem of biased estimation of the decay time constant when fitting exponentials to the autocorrelation function, due to various factors such as low spike count, short trial duration, etc. Ideally, we would want both a less biased estimate, as well as a quantification of the uncertainty, especially when the underlying process has multiple timescales. Her approach applies the framework of approximate Bayesian computation (ABC), which has a more modern synonym under simulation-based inference (SBI) (which, funny enough, is what I work on now with Jakob): ABC (or SBI) takes a generative model, runs many simulations with different parameter configurations, and accepts the parameters that successfully generate simulated data that matches the observed data as the “true” generative parameters. Actually, many methods fit this description, including naive brute-force search, and ABC methods essentially cast the problem into a Bayesian setting (i.e., posterior estimation) and do it in a more efficient way. I won’t bore you with the details, and she actually used most of her talk to showcase the application, I just think it’s a nice method and obviously is very related to the stuff I do now. But the empirical findings are just as nice: using abcTau, she was able to parse two timescales from single-unit recordings from monkeys doing a selective attention task. She finds that the fast timescale on the order of 5ms (membrane? synaptic?) does not change with attention demands, but the slower one on the order of 100ms does, and builds a computational model to suggest that between-column interactions across the visual cortex can explain the slow timescale change.

By this point, I was pretty thankful that it’s lunch time. If you’re counting, in these first 5 talks, we’ve had 5 different methods for computing neural timescales and even more model organisms. We also saw that timescales not only vary across the cortical hierarchy in a “static” way, but change across layers and over time (whose rate of change can also change), are related to different potential mechanisms (from connectivity to variation in thalamic projection) and cognitive processes (from decision-making to attention), and, just for fun, could potentially scale in a scalefree manner in the goldilock zone of quasi-critical dynamics—and this is just a tiny summary of the data we’ve seen so far. Good thing we got a nice lunch break together and a quick stroll on the beach, which might have been my favorite part of the workshop (more on this later). But back to the science, and in the afternoon session, we were treated with 4 talks with 4 entirely different kinds of computational models, each of which were used to study similar questions of heterogeneity, function, and mechanisms.

-

So far, we’ve talked about neural timescales as useful for implicitly tracking timescales in the environment. Manuel Beiran started the afternoon by making this proposed function very explicit, investigating the conditions and mechanisms that allow rate RNNs to not only learn examples of durations, but to generalize to unseen ones. The task is straightfoward: a network receives an input that encodes the intended interval (either via an amplitude, or a delay between two pulses), and after a Go-signal, is asked to produce a ramping output for just as long. Unsurprisingly, RNNs can learn the examples fine, and could even learn to interpolate across unseen durations within the bounds of the training examples. However, networks with full-rank connectivity have a hard time extrapolating, whereas networks whose recurrent weight matrix is low-rank could extrapolate (with some help from a context cue). Looking at the dimensionality of the network dynamics, it appears that the low-rank networks essentially keep to a low-dimensional manifold (!!) whose geometry is fixed, but the speed at which the dynamics unfold along the manifold changes for the different durations. Relating back to the overall theme, Manuel’s talk suggests that connectivity constraints could be a useful thing for networks to be flexible and generalizable in tracking time(scales), instead of falling into tailored solution for individual timescales. Note that the full-rank networks probably can find the same solutions, since they are a superset of the low-rank networks, but the latter have an easier time reaching these solutions (for whatever reason). The preprint has all the information plus more. I also have to mention Manuel’s older paper on disentangling the contribution of adaptation vs. synaptic filtering to network timescales, which I only learned about recently, but has some quite nice (and surprising) results.

-

Going from rate to spiking networks, Alex van Meegen delved further into mechanisms by providing a theory to predict single neuron timescales. I’m not gonna lie, there was a lot of heavy duty math that I basically have no hopes of understanding, probably ever. But Alex framed his talk quite intuitively, and the question he poses is deceptively straightforward: can we predict the timescale of single neuron spiketrains given neuronal parameters and network connectivity, as well as the temporal statistics of the external input? In particular, he highlighted the question of how neurons embedded in a network can acquire much longer timescales than set by their membrane time constants. The contribution of the work is that, instead of brute-forcing it numerically, he worked out a theory that analytically connects underlying parameters to observables. If I understand correctly, the dynamical mean-field theory “squishs” the network of neurons into one “big neuron” whose distribution of input and output statistics (e.g., mean firing rate, ISI, and autocorrelation) can be described by stochastic differential equations, and squishing them this way is okay because the input and output are “self-consistent” (I’ll stop here before I embarrass myself more). He applies it to several different types of neuronal models, including generalized linear models with various nonlinearities, as well as leaky integrate-and-fire neurons with different connectivity structures, and finds good agreement with simulations. All the findings are in the recently published paper, which he did not have time to fully cover in a 20-min talk. One point he stressed was that the theory only predicts the average timescale (and distribution of quantities) across all the neurons in the population, not the timescale of the average population activity, which are markedly different (e.g., Fig. 10 e vs. f in the paper). I found this particularly interesting because the former corresponds to single-neuron timescales measured via spiketrain autocorrelations (e.g., in Murray et al., 2014), while the latter resembles something more like the LFP, or more directly, summed pre-synaptic input into the neuron. But these two quantities are suppose to be self-consistent, so the synaptic or neuronal membrane filter is doing a ton of work to make this happen?

-

Nicolas Perez-Nieves, heroically stepping in remotely an hour before his supervisor, Dan Goodman (who seemed to have had just the worst luck in the days leading up to Cosyne), was schedule to talk, took yet another complementary modeling approach. In his work, he trains spiking neural networks to do a range of classification tasks with temporal structures—a difficult feat in of itself that requires a clever surrogate gradient descent technique (but check Dan’s Cosyne tutorial!). Typically, training such networks, whether spiking or rate-based, means adjusting only the connection weights in order to optimize for task performance, but not here. Nicolas asks whether heterogeneity in single-neuron parameters, specifically their membrane time constants, can improve task performance. In this context, heterogeneity means that every neuron in the recurrent network is allowed to take on a different value for its time constant, instead of all being the same. This heterogeneity is implemented either through initialization alone (and then fixed), through learning, i.e., the neuron-to-neuron weights, along with single-neuron time constants, are learned through back-propagating the task performance loss, or both. This technically sophisticated (and apparently computationally intensive) setup lead to some very intuitively satisfying results: learned heterogeneity in single neuron time constants led to better performance in all the tasks, and their distribution roughly matches experimentally observed gamma distributions of membrane time constants measured in real neurons. In addition, a small number of neurons seem to consistently acquire very long timescales, e.g., 100ms compared to the median of ~10ms. There are a lot of interesting things to consider when extrapolating from these results to the real brain. For example, neurons in the brain probably don’t (and can’t) tune their membrane time constants for each task they face, but the heterogeneity already exists, or at least changes on an evolutionary timescale—so then how is this heterogeneity taken advantage of per task? Does this help explain functional specialization of different brain areas, since neuronal (and network) properties in different regions are more or less defined after early development? They touch on both points (and more) in the recently published paper.

-

Last, but not least, Vy Vo and Shailee Jain gave a jointly pre-recorded talk about a set of very fascinating and interdisciplinary works they did on augmenting machine learning-style RNNs (i.e., LSTM) (with timescales!) to better perform language tasks, and then using them as a model of natural language processing in the brain. They start from the observation that natural languages, like many other processes in our environment, has a hierarchy of timescales (e.g., phonemes to words to sentences). RNN-based language models usually use a gated “neuron”, like the long short-term memory (LSTM) unit, which has an internal memory that decays with some time constant, which is useful for capturing long-range dependencies. Because I’m learning the German language, it’s the only example I can think of, where a question usually has the verb—a critical piece of information—at the very end. To parse a question, you would then need to “hold onto” information, like the subject and object and wheres and whens, until the very end, when it’s clear what the requested action is. In the first part, they show that when LSTM units are assigned a distribution of timescales mimicking that of natural language (i.e., power law), these “multiscale” networks result in better language modeling performance, especially for rarer words. Pretty cool already, but it gets better: they can now give the same text input to the network as what a human reads inside a MRI scanner, and compare network activation to brain activity. Specifically, they try to predict BOLD timecourse at each voxel in the human brain using a weighted combination of the activation of all the units in the RNN. Surprisingly (at least to me), they find that activations of voxels in the temporal lobe (language-y bits?), prefrontal cortex (think-y bits?), and the precuneus can be predicted particularly well. What’s more, they extract an “effective timescale” for each voxel by taking a weighted average of the timescale of the units in the RNN: if a unit is particularly predictive of the activity in a voxel, that unit’s timescale would be weighted high. Through this procedure, they find that the auditory cortex has short timescales, which increases as you move towards parietal areas (TPJ), and there’s a similar gradient along posterior-to-anterior PFC. Some natural questions arise here: how do the RNN-derived voxel timescales compare to those computed based on the BOLD ACF (like in the previous talks), and specifically, how are they different, and also how does the resting state timescale compare with these task-derived ones? Anyway, I think this is a super cool intersection of machine learning and neuroscience research (as well as collaboration between academic and industry labs), where the ML model actually benefited from a brain-inspired architecture while also assisting in explaining brain data.

A lot of questions moving forward

I think my brain was properly scrambled, or rather, has exploded and smeared the workshop meeting room after that whole day of talks (and I had said about as much in our final discussion). It’s the feeling of suddenly experiencing so many new things that one has a tough time keeping track of any single discussion, let alone how they intersect with one another. Fortunately, it seemed like I was not the only one, and people mostly shared the sentiment that this was a good thing: that the talks and discussions at the workshop generated so many new leads and potentially new perspectives that it’s hard to consolidate them into a coherent sequence of thoughts. In the two weeks following, I was able to take some time to digest these new ideas, and going over my notes for all the talks (and their associated papers) for this blog post really helped in picking up the pieces (of my brain) after the dust settled, and I find them falling into the same themes we’ve set out in the beginning. I hereby present you the pieces of my brain, which, of course, are heavily inspired by the workshop speakers and attendees, and in particular recent conversations with Matteo Saponati and Alana Darcher:

-