(Cover image source here and I honestly don’t know if Einstein even said it.)

Edit: this is a sick video that shows basic curiosity-based (strange) science leading to unexpected dividends. He actually acknowledges this at 15:35 in the video. (Thanks Connor!)

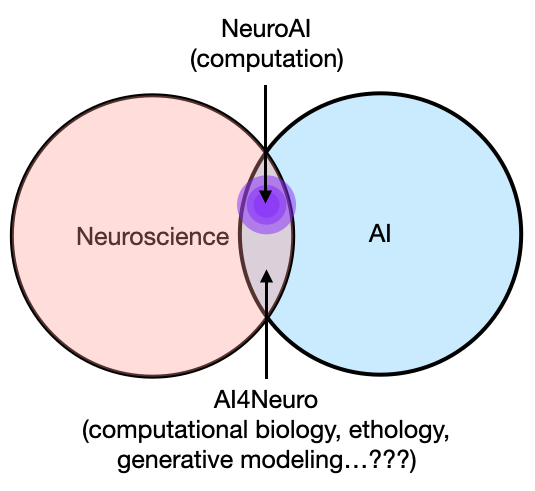

I’ve recently been thinking a lot about the social side of science, including the current state of science in North America, public funding for science, public perception of science, science communication, and social responsibilities of scientists. Over the next few months, I plan on writing a number of posts that detail these haphazard reflections in an attempt to consolidate some meaningful take-aways. Today, I will start with this fundamental question about science: should all scientific investigations have an implication?

Let’s get the easy stuff out of the way first. I’m not asking whether all science will have implications, because it invariably does. Even if you’re studying the most obscure thing, like how mussels produce glue to stick onto boats, the knowledge of the thing itself gives you an ability to predict and manipulate it, and that is a useful implication. Whether anybody else cares, or should care, is a different question. The question I’m asking today is not that, but rather: should we approach scientific investigations with a downstream implication in mind? This is a question I get a lot, and one that I ask myself quite often: what is the point of this particular line of research? If you work in the biological sciences, you probably know exactly what I’m talking about, and are no doubt well-versed in coming up with creative implications for your next grant, or perhaps your lab is established enough that you have a cookie-cutter template. In these cases, it’s almost always linked to diseases: how a gene, protein, or biological process may impact our understanding of a debilitating disease.

Why is this at all controversial, you might ask? After all, it is pretty reasonable that when we apply for grants, we justify what possible good the work does for society, especially when we’re using tax money. Furthermore, engaging the public with what they care about - real-life consequences - should only increase their support and interest in science. Ultimately, having some practical thoughts in mind about how your science might actually be useful is a good thing, right? Here, I would argue that searching for and tacking on implications for a planned project long before we know what it could possibly imply has two serious negative consequences for science itself: the first social, the second scientific. I will situate both of these points in the context of neuroscientific research, but I believe they apply to biological research in general, though it seems that the physical and computer sciences do not suffer as much from this (possibly a correlate of how much public money is dedicated to them).

The reviewer knows the game, the public does not.

It is important for the public to understand what science has meant for the world in the last 200 years or so. This is not really up for debate, because it is the truth. Almost every single artifact we interact with on a daily basis is the result of some scientific understanding of our world, manipulated and coaxed into being useful through engineering or clinical applications. The invisible fact that we live on average twice as long as we did a little over 100 years ago is also a product of science. But consider this: out of those discoveries that aid in extending our life, how many of them were thought to have such implications when the projects were proposed? Furthermore, how many similar projects and discoveries did not ultimately contribute directly to this particular consequence, but could very well have been argued as such? For this, I turn to the classic example of green fluorescent protein (GFP), because of how well it demonstrates the point and how absurdly and unimaginably different the discovery and the product are. GFP itself was discovered in the 60s, in a particular type of jellyfish that glows. Why someone was studying this thing, I really have no idea, though it’s probably because they thought glowing jellyfish were fascinating (they are). You can, of course, find accounts of this discovery via a simple Google search, so I will not elaborate here. What I can tell you is that they did not study GFP because of its tremendous implications in biological sciences, and by extension, health sciences. It turns out, GFP and fluorescence microscopy are so ubiquitous in science these days that even first-year undergrads get to play with them. Put your hand up if you honestly think you could’ve foreseen this coming in the 60s; I’d like to talk to you about investment strategies.

So why is this an issue for the public? Because selling something based on potential implications that hit a soft spot in the heart of the general public, while not outright dishonest, does not generate good will, especially when the work (almost always) fails to achieve the proposed implication. “But science is slow, and it takes patience!” you might say. True, though that is completely beside the point. After all, how many grant proposals, or interviews where a scientist talks about the potential impact of a discovery, actually state an estimated timeline on which we will arrive at the projected implications, or even what the specific implication is? I read an article recently lamenting the poor return on investment of NIH money on neuroscience in the last decade or so, when it was hailed that such an injection of funding should push us forward in curing, or at least understanding, all kinds of mental and neurological disorders. How many of those have we cured or understood from beginning to end? If it’s not zero, then it’s definitely countable on one hand. From a scientific point of view, that is of course completely reasonable, if not expected. Science IS slow, and it DOES take patience. It’s not a magic circle where you can throw money in and get cures out. Over time, all the talk about implication this and implication that, while we under-deliver over and over again to the public, will erode, and already has eroded, the public’s perception of science. This is in part due to a lack of proper education about the scientific process itself, which sets up an unrealistic expectation of what science delivers. Scientific research is not the same as industrial research, but it’s starting to feel like it more and more, and we scientists are not helping our own case when we suggest implications that we know are so far off in the future we might as well not state them.

Alas, at the end of the day, it is something we are all guilty of, because it’s part of the “game” to get funding and get published. Until the incentives change drastically, it may be the only way to keep doing science. I would simply recommend that, in the process of playing the game for our grant or paper reviewers, we do not fool ourselves, or the good people of the world, into thinking that what we promised might actually be delivered in any reasonable amount of time. And it could serve us well to be plainly and painfully honest sometimes and just say: “well, I can’t really tell you what the implication is, and I don’t really care if there is one. But rest assured that when we figure this thing out from top to bottom, someone somewhere WILL come up with something useful to do with it.”

Roadmaps limit imagination

Working with future implications in mind is counter to the concept of science itself. What do I mean by that? Science, to me, is the undirected examination of a phenomenon or object in nature for the sole purpose of understanding it and satisfying a weird curiosity. Sure, this is just semantics, and what I personally think science is or ought to be really doesn’t matter as long as it’s productive. So I would further argue that goal-directed research, for the most part, stifles innovation by removing parallel thinking. Let’s say our goal is to cure disease X, and methods A, B, and C are the most promising so far (at least they were published in Nature, Science, and Cell). Why in the world would I try anything else if I, too, want to cure disease X, and also be the first? This is not to say that objective-oriented research isn’t useful; it absolutely is. It’s probably more useful than basic science research, since that’s what actually makes discoveries applicable. Even in basic science itself, goal-directed efforts, like the Allen Brain Institute, have achieved plenty in a very short amount of time. But the problem arises when we slowly and obliviously replace all of basic science research with engineering and industrial research, not in name but in essence, because soon enough, we won’t have new discoveries to capitalize on. No one is explicitly doing this, which is what makes it all the more insidious - all it takes is to see where the money flows, and the money flows to projects with the best implications - see where I’m going with this?

So how do we combat this? Christof Koch spoke at UCSD recently about the Allen Brain Institute. Afterwards, I asked him what role he sees academic research labs playing in the future, in light of the fact that the ABI was able to accomplish so much in so little time and with so little money (relatively speaking). His answer, while unsurprising, injected some hope into academic research. Basically, he said that university labs should keep doing what they do, which is exerting parallel efforts at problems so that we can still come up with new discoveries, like how DNA randomly evolves through recombination. I would append to that in this way: we should direct half the public money towards a few of these unified and industrialized efforts, like the ABI, because they will inevitably have something to show for it. The rest of the money should be divided up basically equally, to pay for academic labs and researchers to look at whatever the hell they want to look at. In fact, to really promote science in youth, we have to encourage students to pursue projects bounded only by their imagination and curiosity, and not by practical utility. My ideal vision for this is basically some form of universal basic income where researchers get paid a living (but oh god not minimum) wage to work on whatever problem they want to. I hear France has some kind of lifetime personal funding like that. After all, working on interesting problems and getting rich and famous at it seems a bit like having your cake and eating it too, which is exactly what doesn’t make sense: academics making CEO money, or 20% of the labs getting 80% of the funding. I wonder what the actual statistics are on that…

Science is, for now, a public investment, just like infrastructure. And like infrastructure, it requires long-term vision and patience to really bear fruit. If we focus all of our energy and resources on projects that “have implications”, we miss the most valuable part of science: utility through serendipitous discoveries. Again, I’m not saying we should scrap goals, or targeted investigations that follow a clear line of pre-established ideas. I am simply suggesting that we should re-evaluate whether we over-promise to the public for the sake of existing in this system, and whether that has subdued true scientific innovation. If so, instead of accommodating the shitty system, maybe we can do something to change it?